By David Goddard. Photography by Shawn Poynter.

Cameras come in all shapes and sizes, and they serve a wide assortment of needs.

A scientist in a lab might need to shoot at incredibly minuscule scales, while a wedding photographer needs to capture the emotions of the day at varying scales.

Someone shooting the night sky or a running river might want a slow shutter speed to show the passage of time, while a red-light camera needs to quickly snap the license plate of an offending driver.

Thanks to a project with connections to UT’s Min H. Kao Department of Electrical Engineering and Computer Science, cameras have the potential to become much faster and more sensitive.

The key? Neuromorphic computing.

“If you equip event-based cameras with neuro sensors, you can really ramp up the response time by several measures,” said Professor James Plank. “That is an important, sought out quality that gives them relevance in a variety of fields.”

The UT team is working on the project through a Department of Defense Small Business Technology Transfer Program grant with Timothy Klein via Areté, a cutting-edge research center in Huntsville, Alabama. Plank serves as principal investigator, with Professor Garrett Rose and Assistant Professor Catherine Schuman as co-PIs.

Their part of the project is to bring their expertise in neuromorphic computing to cameras and detection devices, making those devices highly sensitive and able to detect the slightest change in what they are viewing.

“There are several limitations to traditional cameras and detection that neuromorphic computing can help improve or even solve,” said Rose. “Neuromorphic computing, by design, is meant to mimic the firing of the neural network of the brain, one of the best ‘computers’ that we have.”

Resolution isn’t as much a concern as the ability to immediately flag an event or anomaly the millisecond it occurs.

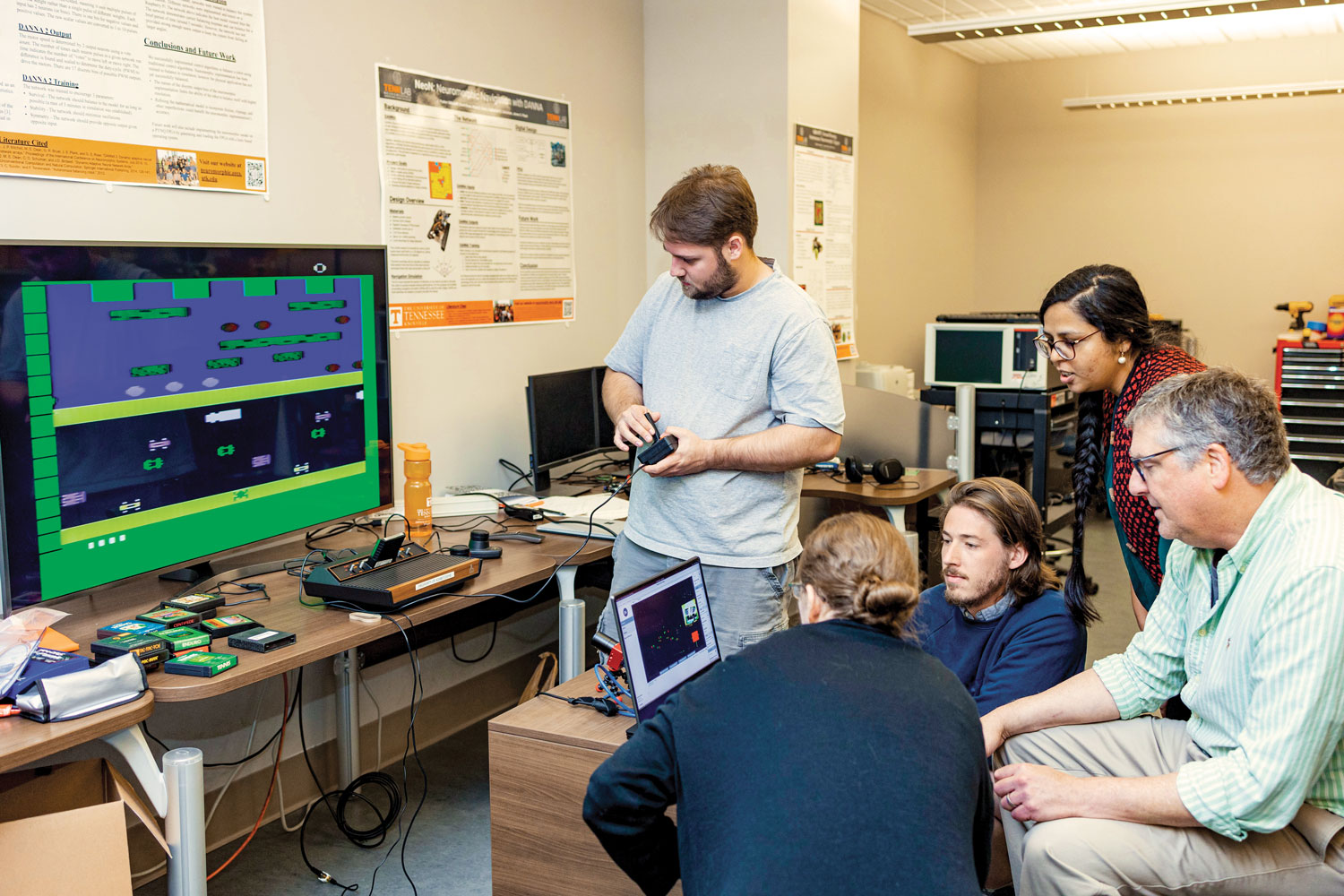

As an example, the team set up a camera to monitor a simulation of an old-school Pac-Man game from the Atari 2600—with every chomp, power pellet eaten, and ghost killed showing up on the screen as a series of events indicated by dots.

The system worked, even in the most rudimentary of backgrounds.

“With a high-speed lens you get less resolution but much more data,” said Schuman. “We’re in the stage of now building a more robust demo to help bring this technology to scale.”

The three UT researchers each bring their own piece of the puzzle to the table: Rose with his work on reconfigurable circuitry and interfaces, Schuman with her work on machine learning and algorithms, and Plank tying both together with his knowledge of interfaces and algorithms as well as system integration. It’s the sort of teamwork that has made their research group, TENNLab, such an attractive partner to outside entities like Areté.

Their work has potential impact across a number of areas, from industrial production to sport science, where deviations from the norm can make a difference.

The current phase of the project began in August and will run for two years.

In addition to four other people from Areté, the team includes seven students from UT, giving them hands-on research experience.